Sign Language Recognition

For my final project in Stanford's CS229 Machine Learning course, I worked on sign language recognition. The motivation was that immediate feedback on gestures can greatly improve sign language education.

The dataset consisted of 1800 self-produced webcam images of 3 people signing 25 letters of the American Sign Language (ASL) alphabet.

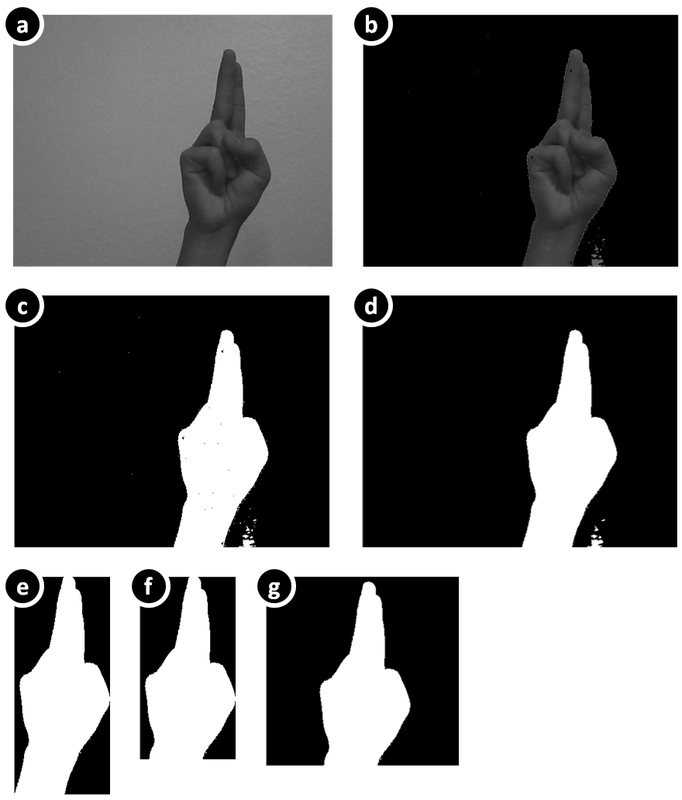

We normalize the images, scale them to 20 × 20 px, and use the resulting binary pixels as features. The figure above shows the image processing workflow: background subtraction (b), binarizing (c), image cleanup (d, e), and normalizing (f, g).
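The workflow above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the function name `preprocess`, the `diff_threshold` value, and the use of a separately captured empty-scene `background` frame are all assumptions, and the cleanup steps (d, e) are left out. It assumes grayscale input and uses NumPy.

```python
import numpy as np

def preprocess(frame, background, diff_threshold=30, size=20):
    """Turn a grayscale webcam frame into size*size binary features.

    frame, background: 2-D uint8 arrays of the same shape (assumed inputs).
    diff_threshold: hypothetical cutoff for "pixel differs from background".
    """
    # (b) Background subtraction: absolute difference from the empty scene.
    diff = np.abs(frame.astype(np.int16) - background.astype(np.int16))

    # (c) Binarize: foreground wherever the difference exceeds the threshold.
    mask = diff > diff_threshold
    if not mask.any():
        # Nothing detected: return an all-zero feature vector.
        return np.zeros(size * size, dtype=np.uint8)

    # (f) Normalize position: crop to the bounding box of the foreground.
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    crop = mask[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]

    # (g) Normalize scale: nearest-neighbor resample to size x size.
    r_idx = np.arange(size) * crop.shape[0] // size
    c_idx = np.arange(size) * crop.shape[1] // size
    small = crop[np.ix_(r_idx, c_idx)]

    # Flatten to a vector of 0/1 pixel features.
    return small.astype(np.uint8).ravel()
```

Stretching the bounding box to a square ignores the hand's aspect ratio; whether the original pipeline preserved it is not stated here, so treat that choice as a simplification.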

Using a linear or Gaussian kernel SVM on the pixel features, we correctly classify up to 93% of the signs, outperforming k-nearest neighbor classification by about 10%. Using a custom set of features we developed, an SVM correctly classifies 81% of the signs.
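For reference, the k-nearest neighbor baseline on binary pixel features can be sketched in a few lines. This is an illustrative sketch only: the function name `knn_predict`, the choice of Hamming distance, and `k=3` are assumptions, not details taken from the project.

```python
import numpy as np

def knn_predict(train_X, train_y, query, k=3):
    """Predict the label of one binary feature vector by majority vote.

    train_X: (n_samples, n_features) array of 0/1 pixels.
    train_y: (n_samples,) array of labels.
    query:   (n_features,) array of 0/1 pixels.
    """
    # Hamming distance: number of pixels that differ from the query.
    dists = np.count_nonzero(train_X != query, axis=1)

    # Indices of the k closest training images (stable sort breaks
    # distance ties by original order).
    nearest = np.argsort(dists, kind="stable")[:k]

    # Majority vote among the neighbors' labels.
    labels, counts = np.unique(train_y[nearest], return_counts=True)
    return labels[np.argmax(counts)]

# Toy usage with 4-pixel "images" (made-up data for illustration).
train_X = np.array([[0, 0, 0, 0],
                    [0, 0, 0, 1],
                    [1, 1, 1, 1],
                    [1, 1, 1, 0]])
train_y = np.array(["A", "A", "B", "B"])
prediction = knn_predict(train_X, train_y, np.array([0, 0, 1, 0]))
```

An SVM replaces the neighbor vote with a learned decision boundary, which is where the reported accuracy gain over this baseline comes from.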

For more detail, see the final paper.